The Neural Aesthetic

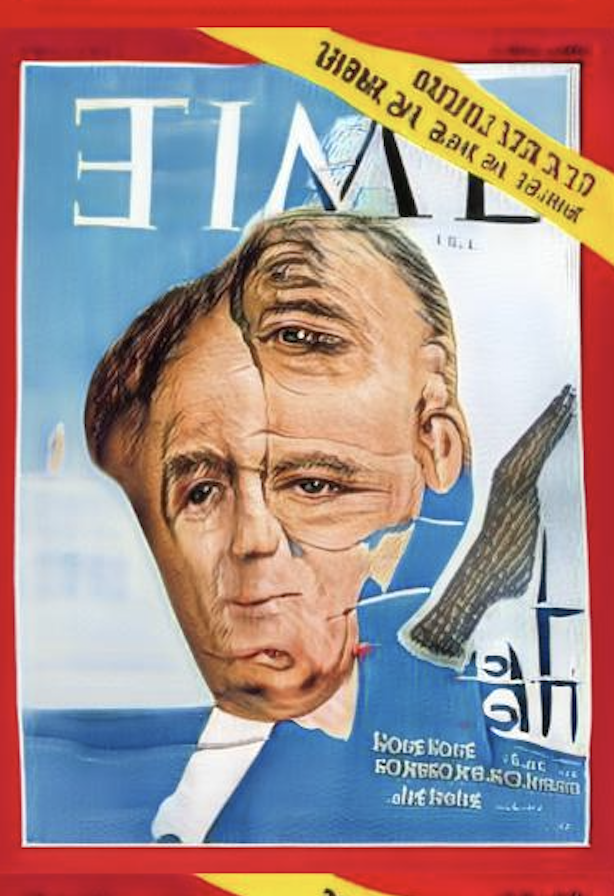

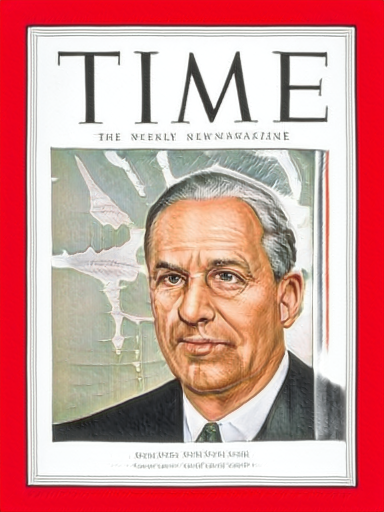

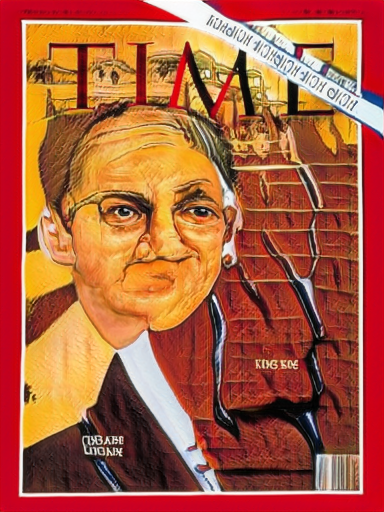

Time Magazine Deep Fakes

This project uses four machine learning models. StyleGAN2-ADA, trained on 4,000 Time magazine covers, creates fake, almost realistic covers (at least the black and white ones). GPT-2, trained on up to 20 quotes each from 2,000 different subjects who have appeared on the cover of Time magazine, generates fake quotes. First order motion transfer and Wav2Lip copy volunteers' facial expressions and head movements onto the cover subjects while they read the quotes generated by GPT-2.

StyleGAN2-ADA

I started by training StyleGAN2-ADA in Google Colab and on the HPC. The HPC results were superior, but it was extremely difficult to get a slot to train on multiple GPUs, since everyone at NYU is working on finals and I do not have priority. I found it easier to train more slowly in Colab with one GPU.

The data set included ~4,000 covers. I started at 256x256 resolution, then jumped to 1024x1024. Results follow.

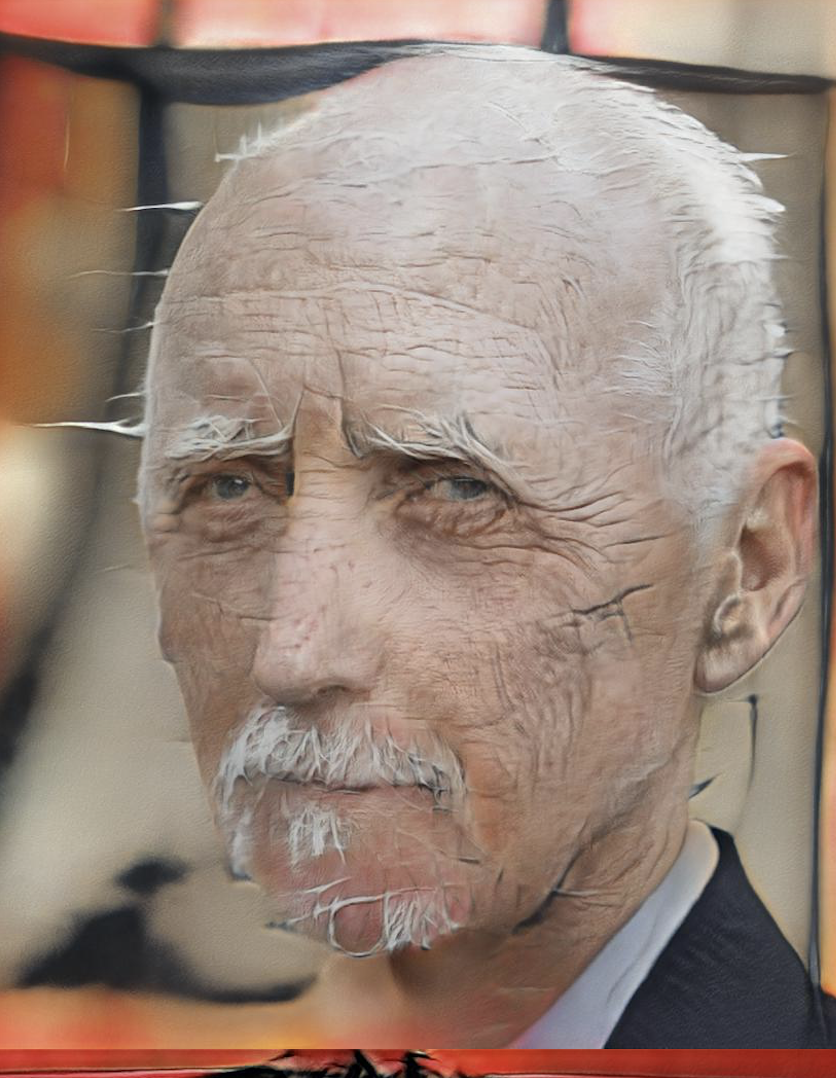

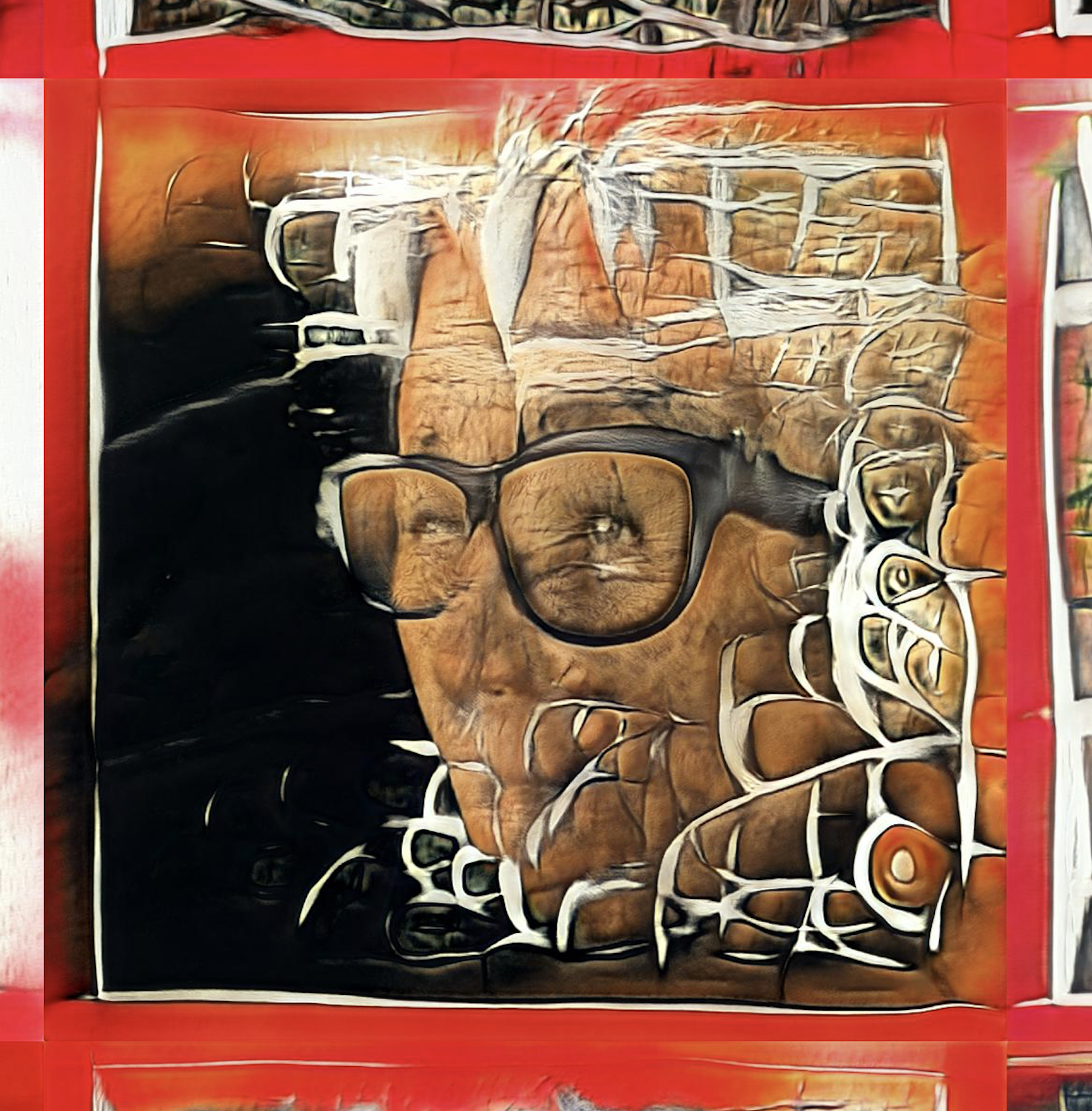

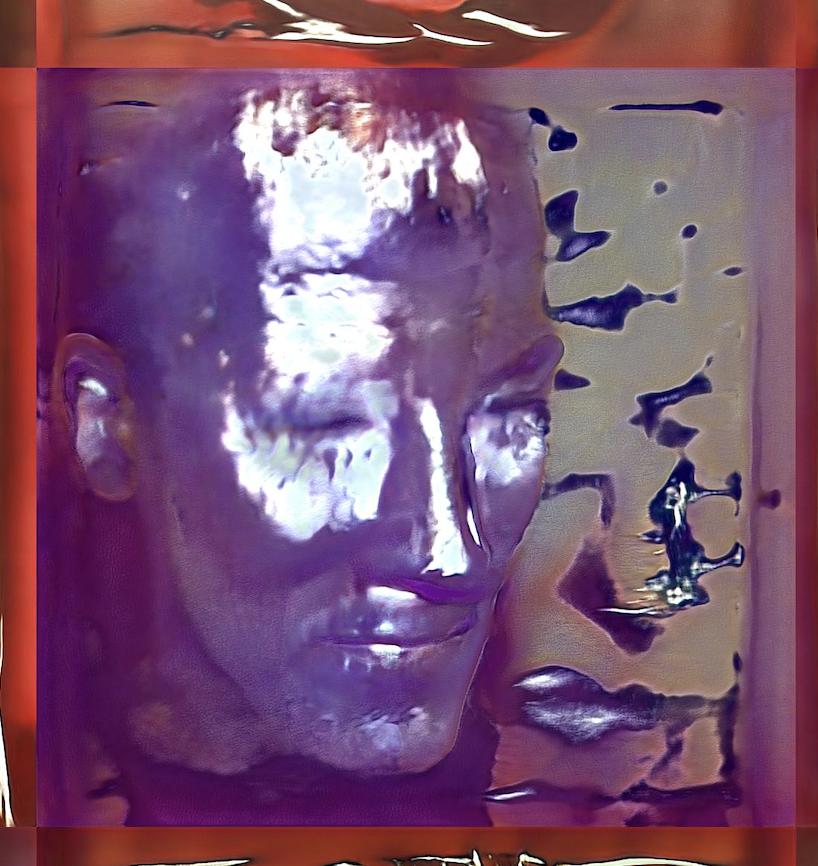

The 1024 version suffered from mode collapse very quickly, but the results after 12 kimg of training were quite interesting looking. Training from the FFHQ faces dataset produced a mix between real humans and HD, realistic cartoon versions of those people.

I then started training a rectangular version of the data set (closer to the normal magazine shape) on a tweaked version of StyleGAN2-ADA in order to optimize the resulting image, since I previously had to resize squares to the proper aspect ratio. The HPC queue to start a server has become much longer as the end of the semester approaches. To find the right configuration, I have been running it on both the HPC and Google Colab at the same time. It seems that if you pay for Colab Pro, you can get a V100 GPU most of the time and train fairly quickly. Below are some of the initial results.

When it seemed like the model was starting to collapse, I fine-tuned on the subset of black and white covers, with astonishingly realistic results.

GPT-2

While StyleGAN2-ADA trained, I wrote a scraper to collect up to 20 quotes each from over 2,000 Time magazine cover subjects. I fine-tuned GPT-2 for a couple of days and produced a model that can spit out fairly realistic and often funny “quotes” that are not real. Below are some representative examples. The quotes skew almost entirely first person and are often political.

Power leads to more power, no matter what your racket, and not only were they rich and influential but they were smart as hell, too.

The American people voted to protect themselves against the lunatic epidemic.

Girl, you better check yourself before you wreck me.

I am a conservative, but I am not a zombie.

Who in the world am I? Ah, that's the great puzzle!

I believe in manicures. I believe in overdressing. I believe in primping at leisure. I believe in Martian poetry. Everything is so fucking true.

My God, what am I doing here? It feels so surreal. I can’t find a way to explain this to people. I can’t figure out how to explain it. I remember when I started, it was just looking at the guys who were watching the monitor and who were like, 'What the hell is this guy doing? Is he a robot?'

Good for you, you have a heart, you can be a liberal. Now, couple your heart with your brain, and you can be a conservative

If the whole world would get warmer and warmer – that is the world for us.

The fate of America is here. I will not yield to anyone about guns in our society.

I find that I would like to be able to take whatever I want and make it whatever I want.

I'm not big on compromise. I understand compromise. Sometimes compromise is the right answer, but oftentimes compromise is the equivalent of defeat, and I don't like being defeated.

The physical world is real, not unreal, and cannot be ignored.

Hello you sick twisted freak

Note: After training and recording the videos below, I realized my model suffered from overfitting, and about half of the quotes above were present in the input dataset. It appears that this happens with GPT-2 when your dataset is small and is also part of the data that was used to train the base GPT-2 checkpoint you are fine-tuning from.
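The overlap described above can be caught before recording anything by comparing each generated quote against the training quotes. The following is a minimal sketch of that check, not the code used in this project: the function names `normalize` and `split_memorized` are hypothetical, the sample strings are toy placeholders rather than real data, and it only catches near-verbatim copies (same words after lowercasing and stripping punctuation), not paraphrases.

```python
import re


def normalize(quote: str) -> str:
    """Lowercase, unify curly apostrophes, drop punctuation, collapse whitespace."""
    text = quote.lower().replace("\u2019", "'").replace("\u2018", "'")
    text = re.sub(r"[^a-z0-9' ]+", " ", text)
    return " ".join(text.split())


def split_memorized(generated, training):
    """Split generated quotes into (memorized, novel) lists.

    A quote counts as memorized when its normalized form also appears
    in the normalized training set.
    """
    seen = {normalize(q) for q in training}
    memorized = [q for q in generated if normalize(q) in seen]
    novel = [q for q in generated if normalize(q) not in seen]
    return memorized, novel


# Toy placeholder data (assumption: not taken from the real dataset).
training_quotes = ["An example quote, from the training set."]
generated_quotes = [
    "an example quote from the  training set",
    "A brand new sentence.",
]

memorized, novel = split_memorized(generated_quotes, training_quotes)
print(f"{len(memorized)} memorized, {len(novel)} novel")
```

Running a filter like this over the model output, and keeping only the novel list, would separate actual GPT-2 quotes from ones that slipped through from the training data.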

First Order Motion & Wav2Lip

I then had friends record videos of their faces while they spoke selected quotes. I fed these videos into a first order motion transfer model, and in some cases also a Wav2Lip model, to produce deep-faked talking heads.

The black and white outputs were ideal because you can crop just the face portion for the motion transfer. If you use the generated color covers, you have to crop the head and then insert it back into the background, adding an edge on the top. Otherwise the motion transfer will distort the entire image, not just the head (see TimeGanWW2).

****This is a Glenn Beck quote that slipped into the model output due to overfitting.

****Actual GPT-2 quote

***This is a Trump quote that snuck into the output due to overfitting.

***Actual GPT-2 quote

Time GAN: The Man

A latent walk through Time magazine covers

For the midterm project, I created a GAN model trained on a Time magazine cover data set (every Time magazine cover with a face on it from 1940-2020), which I scraped from Time.com. The results boil down to a generic white man whom I am calling “The Man”. The images were fed into StyleGAN 1 on Runway ML, starting from the portraits checkpoint.

This project, which I plan on continuing to develop with more powerful compute resources and StyleGAN2, exemplifies the inherent bias in artificial intelligence systems. The algorithm itself is not predisposed to be racist and to create only covers with white men, but the data set is representative of American culture over the past 80 years, which is a history of suppression of BIPOC/POC/female voices. AI systems are already impacting our lives in ways we do not fully comprehend, from police forces predicting repeat offenders and where crime will take place to employers searching for potential candidates. It is important to realize that these systems can be created with the best intentions but still result in prejudiced predictions that target white men for jobs and BIPOC men as future criminals. This creates feedback loops that reinforce systemic oppression, with a side of plausible deniability due to the black box nature of artificial intelligence algorithms.

After training the model, I created a hosted endpoint on Runway ML and a p5.js sketch that accesses the endpoint to generate unique fake Time magazine covers. I then hosted the p5 sketch on GitHub and embedded it into my website using an iframe. If you access this page, you will see unique Time magazine covers that crossfade between the currently displayed cover and the next generated cover. You are generating the images by accessing the website. If you add “?frame” to the end of the URL, it will pass the parameter through the iframe to the hosted p5 code on GitHub and generate just one frame / cover.
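The “?frame” switch is a bare query flag: a key with no value. The actual site handles it in p5.js; the snippet below is only a Python illustration of the same idea, with a hypothetical function name (`wants_single_frame`) and placeholder URLs. It uses the standard library's `urllib.parse`, where `keep_blank_values=True` is needed so that a key without a value is not dropped.

```python
from urllib.parse import parse_qs, urlsplit


def wants_single_frame(url: str) -> bool:
    """Return True when the URL's query string contains a `frame` key.

    Works for a bare flag (`?frame`) as well as `?frame=1`.
    """
    query = urlsplit(url).query
    # keep_blank_values=True keeps keys that have no value, like "frame".
    params = parse_qs(query, keep_blank_values=True)
    return "frame" in params


# Placeholder URLs (assumption: not the real site address).
for url in ("https://example.com/covers?frame", "https://example.com/covers"):
    mode = "single cover" if wants_single_frame(url) else "continuous crossfade"
    print(url, "->", mode)
```

A page built this way would generate one cover and stop when the flag is present, and otherwise keep requesting new covers and crossfading between them.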

If you want to see the site in action, you might have to wait a minute or so after loading for the Runway model to “wake up”.